We have Citrix PVS 7.1 set up across 5 datacenters in 3 geographically dispersed regions, with 2 primary sites each having a primary datacenter and a DR datacenter. Each datacenter has a high-speed local file share for our PVS images. Our Active Directory architecture is 2003 level. Our PVS setup is configured to stream the vDisks from a local file share. This information is important context for what follows.

I found an issue in one of our datacenters when we had a problem and had to reboot some VMs. Essentially, our target devices were booting slowly. Like really, really, really slowly. On top of that, once they did boot, logging into them was slow. Doing anything once you were logged in was slow. But it did seem to get faster the more you clicked and prodded your way around the system. For instance, the 'Start' menu took 15 seconds to open the first time but was normal afterwards, while clicking on any sub-folder was slow with the same symptoms.

[Image: This is a poorly performing VM. Notice the high number of retries, slow throughput and long boot time.]

Conversely, connecting to a target device in the other city showed these statistics:

[Image: Example of a much, much better performing VM. 14 second boot time, throughput about 10x faster than the slower VM and ZERO retries.]

So we have an issue. These two separate cities are configured nearly identically, but one is suffering severely in performance. We looked at the host the VM was running on, but moving it to different hosts didn't seem to make any difference. I then RDP'ed to our VM and launched Process Monitor so I could watch the bootup process. This is what I saw:

chfs04 is the local file server hosting our vDisk. citrixnas01 is a remote file server, reached over a WAN connection, in the datacenter in the other city. For some reason, PVS is reading and sharing the *versioned* avhd file that resides on the local file share, but it is reading the base VHD file from citrixnas01. This is, obviously, a huge issue. Reading the base image over a WAN would explain both the poor performance we are experiencing and the high packet retry counts.
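If the Process Monitor trace is saved as CSV, a quick tally of which file servers show up in the UNC paths makes this kind of mix-up easy to spot. The following is a minimal sketch, assuming the export has a `Path` column; the share names and rows in the sample are made up for illustration and are not from our trace.

```python
import csv
import io
from collections import Counter


def count_unc_servers(csv_text, path_column="Path"):
    """Count file operations per UNC server in CSV text from a trace export."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        path = (row.get(path_column) or "").strip()
        if path.startswith("\\\\"):
            # \\server\share\file -> "server"
            server = path[2:].split("\\", 1)[0].lower()
            if server:
                counts[server] += 1
    return counts


# Hypothetical sample rows; the share and file names are placeholders.
sample = "\n".join([
    '"Process Name","Operation","Path","Result"',
    r'"example.exe","ReadFile","\\chfs04\share\disk.avhd","SUCCESS"',
    r'"example.exe","ReadFile","\\citrixnas01\share\disk.vhd","SUCCESS"',
    r'"example.exe","ReadFile","\\CITRIXNAS01\share\disk.vhd","SUCCESS"',
    r'"example.exe","ReadFile","C:\Windows\notepad.exe","SUCCESS"',
]) + "\n"

for server, hits in count_unc_servers(sample).most_common():
    print(server, hits)
```

For a real export, read the file with `encoding="utf-8-sig"` so a leading byte-order mark does not end up in the first column name.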

But why is it going over the WAN? It turns out that the avhd file contains a section in its header that describes the location of the parent VHD file. PVS is simply using the native mechanism built into the VHD spec for chained disks.

[Image: Hex editing the avhd file reveals the chain path.]
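Instead of a hex editor, the same information can be pulled out with a few lines of Python. This is a rough sketch based on my reading of the published VHD image format layout (a footer copy at offset 0, then a dynamic disk header holding the parent name and up to eight parent locator entries). Treat the offsets as assumptions to check against the spec, and note that `read_parent_paths` is a name invented for this example, not a PVS or Citrix tool.

```python
import struct

DISK_TYPE_DIFFERENCING = 4  # per the VHD format; 2 = fixed, 3 = dynamic


def read_parent_paths(f):
    """Return the parent hints stored in a differencing VHD/AVHD.

    `f` is a binary file object. Returns {} for non-differencing disks.
    """
    f.seek(0)
    footer = f.read(512)
    if len(footer) < 512 or footer[0:8] != b"conectix":
        raise ValueError("no VHD footer copy at offset 0")
    (header_offset,) = struct.unpack(">Q", footer[16:24])
    (disk_type,) = struct.unpack(">I", footer[60:64])
    if disk_type != DISK_TYPE_DIFFERENCING:
        return {}

    f.seek(header_offset)
    hdr = f.read(1024)
    if len(hdr) < 1024 or hdr[0:8] != b"cxsparse":
        raise ValueError("dynamic disk header not found")

    # Parent name: 512 bytes of big-endian UTF-16, NUL padded.
    name = hdr[64:576].decode("utf-16-be", errors="replace")
    paths = {"parent_name": name.split("\x00", 1)[0]}

    # Eight 24-byte locator entries start at offset 576 of the header.
    for i in range(8):
        start = 576 + 24 * i
        code, _space, length, _reserved, offset = struct.unpack(
            ">4sIIIQ", hdr[start:start + 24]
        )
        # W2ku = absolute Windows path, W2ru = relative path (UTF-16 LE).
        if code in (b"W2ku", b"W2ru"):
            f.seek(offset)
            raw = f.read(length)
            paths[code.decode("ascii")] = raw.decode(
                "utf-16-le", errors="replace"
            ).rstrip("\x00")
    return paths
```

Opening a versioned file with `open(path, "rb")` and passing it in should return the stored parent name plus whatever absolute (`W2ku`) or relative (`W2ru`) locators the file carries. An absolute locator naming a remote server is the thing to look for.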

(Un)Fortunately for us, our PVS servers can read the file share across the WAN and pull and cache data locally, so performance tended to improve the longer the VM was in use. To fix this issue immediately, we edited the hosts file on the PVS server so that citrixnas01 resolves to the local file server.
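A small sanity check for a change like this is to confirm what the hosts file now maps the remote name to, and that the remote name and the local file server resolve to the same address. The sketch below assumes the standard hosts file format (address, then one or more names, `#` for comments). The IP address is a documentation-range placeholder, not our real one, and both function names are invented for this example.

```python
import socket


def hosts_override(hosts_text, name):
    """Return the address a hosts-format text maps `name` to, or None."""
    wanted = name.lower()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split columns
        if len(fields) >= 2 and wanted in (h.lower() for h in fields[1:]):
            return fields[0]
    return None


def resolves_to_same_address(alias, target, resolve=socket.gethostbyname):
    """True if both names resolve to the same IPv4 address."""
    return resolve(alias) == resolve(target)


# Hypothetical hosts entry; 192.0.2.10 is a placeholder address.
sample_hosts = (
    "# point the remote NAS name at the local file server\n"
    "192.0.2.10    citrixnas01\n"
)
print(hosts_override(sample_hosts, "citrixnas01"))
```

On the PVS server itself, the text would come from `C:\Windows\System32\drivers\etc\hosts`, and `resolves_to_same_address("citrixnas01", "chfs04")` would use the live resolver.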

After executing that change I rebooted one of the target devices in the *slow* site.

[Image: Throughput is now *much* better, but the number of retries is still concerning.]

Now, the tricky thing about this issue is that we thought we had it covered because we configured each site to have its own store:

Our thinking was that this path is what the PVS service would use when trying to find VHDs and AVHDs. So when it looked for XenAppPn01.6.vhd, it would look in that store. Obviously, this line of thinking is not correct. So if your sites are far apart, it is important that the path you use when creating a version resolves to the fastest local share in every site. For us, the folder structure is identical in all sites, so a hosts file entry pointing citrixnas01 to the local file share works in our scenario, at least to start with.

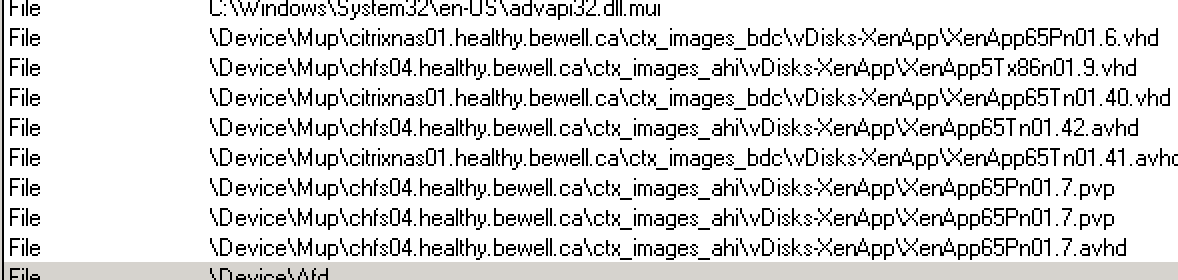

EDIT - I should also note that you can see the incorrect file paths in Process Explorer as well. Changing the hosts file wasn't enough; we also needed to restart the streaming service, as it held cached data on how to reach the files across the WAN. Process Explorer can show you the VHD files that the stream service has open and where they live (under files and handles):

[Image: Citrixnas01 shouldn't be in there... Hosts file has been changed...]

After a streaming service restart:

[Image: Muuuuch better.]

1 comment:

I use Subnet Affinity set to fixed, under the load balancing parameters of the VHD. That forces the Target Device to use a PVS server in the same subnet, so you don't get the problems you describe. Of course each site would need to have its own subnet, but I would guess they already do :)
